What Truly Amazed Me in 40 Years of Computing

Experiences with computers over the last 40 years that made me say "Wow". Most changes in tech are incremental, but certain things just surprised me!

It’s been exactly 30 years since I packed my bags and relocated to Canada. I completed my undergrad in Computer Science in New Zealand, but was encouraged to attend graduate school in another country. This three-decade milestone got me thinking about the technical advances in my lifetime, dating back to 1982 when my family purchased a home computer.

Computer technology has dramatically changed, but there’s only a small list of things that really blew my mind. Most changes were incremental, building on top of existing ideas I’d already seen. Sure, the numbers keep on getting larger - CPU speeds and RAM sizes increased by a factor of millions - but that wasn’t too surprising, nor exciting.

But, what was exciting? What were the things that made my jaw drop when I saw them? Let’s go from 1982 to 2023, looking at those milestone events and thinking about why they were so meaningful. Of course, in 2023 many of these technologies seem old and boring, but at the time they were amazing!

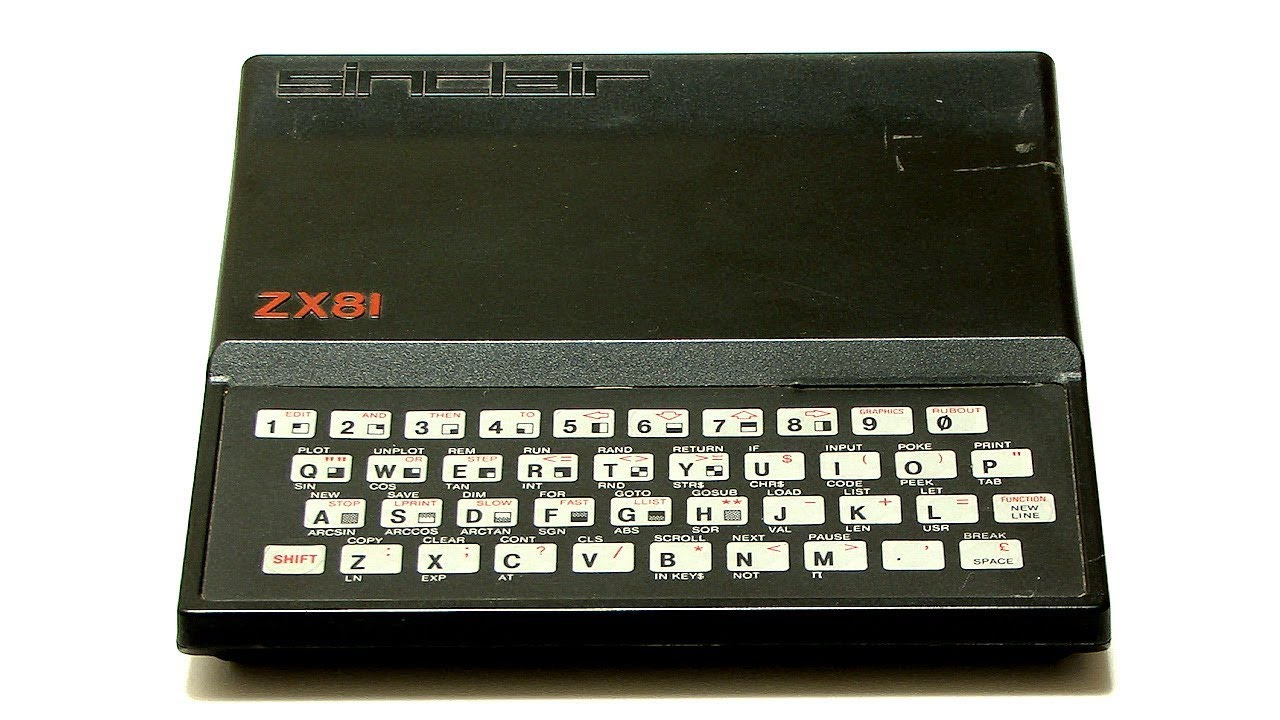

My First Computer - Sinclair ZX81 (1982)

The first computer my family owned was a Sinclair ZX81. It had 1KB of RAM (expandable to 16KB), ran at 3.25MHz clock speed, had 64x44 black-and-white pixel resolution, plugged into the family TV, and used cassette tapes for data storage (roughly 300bps of audio screeches as data loaded).

My first program was to print “Peter” in a continuous loop on the screen.

10 PRINT "PETER"

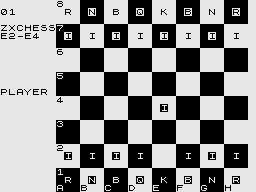

20 GOTO 10I’d seen computers on TV shows, and my Dad’s office had a mainframe of some kind, but this was the first time I could program a computer for myself. I wrote software in BASIC, but also taught myself Z80 machine code to write “high-performance” games. Here’s an example of a chess game that we purchased for the ZX81.

Why was this jaw-dropping? Suddenly the world was a different place for me, and I spent hours each day sitting in front of the keyboard, rather than playing outdoors in the yard. Most families didn’t have a computer until 10 years later, and there was no (public) internet yet. The world was still based on knowledge-sharing via paper (magazines, newspapers), with broadcast radio and TV being our main source of news and entertainment.

Life had changed for me, and was about to change for the rest of the world.

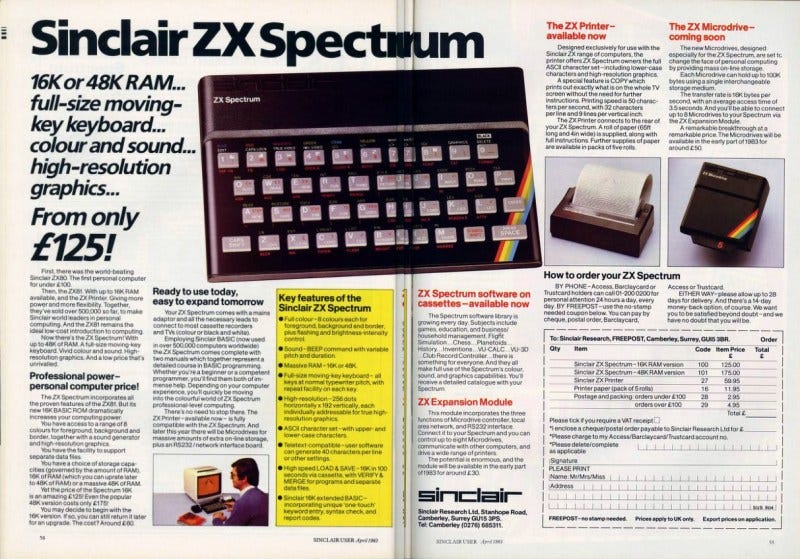

Marketing for my Second Computer (1982-83)

Growing up in New Zealand had only one downside that I cared about - we were so far away from the UK or US that our exposure to new technology was via printed magazines (remember, no public internet yet). These magazines were shipped by sea and often arrived in our shops about 2-3 months after being published. Despite the delay, these were our lifeline for learning about new technology.

My next jaw-dropping experience was seeing the following dual-page advertisement for the ZX Spectrum computer (successor to the ZX81 we already owned).

Yes, that’s correct - it was the glossy advertising that changed my life, not the computer itself. It was the promise of colour graphics, higher resolution (256 x 192 pixels), and sound/music! For at least six months (until early 1983), I would excitedly read the advertisements, study the magazine’s program listings to learn the new variant of BASIC, and dream about the new capabilities of the ZX Spectrum.

Sure, it was great to eventually own a ZX Spectrum, but the jaw-dropping part came from the magazines themselves.

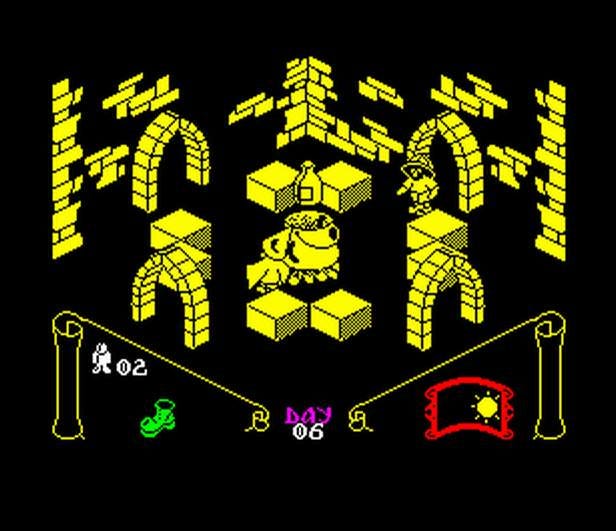

Knight Lore (1984)

The ZX Spectrum was the first computer for many teenagers in the 1980s (at least, in much of Europe, Australia, and New Zealand). Many of those kids are now 50+ years old, and fondly recall the five minutes of screeching audio as our games loaded off cassette tape. There are even Facebook groups devoted to reminiscing about the good old days.

Knight Lore was a game produced by Ultimate Play the Game, a small UK-based software company. The goal of Knight Lore was to navigate a 3D world, find the ingredients for a magic potion, then place them in the cauldron, all without losing your lives (see walkthrough video).

There were plenty of awesome games that pre-dated Knight Lore, but there was something inspiring about this game. It was a combination of the amazing 3D graphics, the challenging game play, and the marketing. Ultimate Play the Game was known for their full-page magazine advertisements providing no detail other than the game’s title and the company logo. This is what made Knight Lore stand out as jaw-dropping.

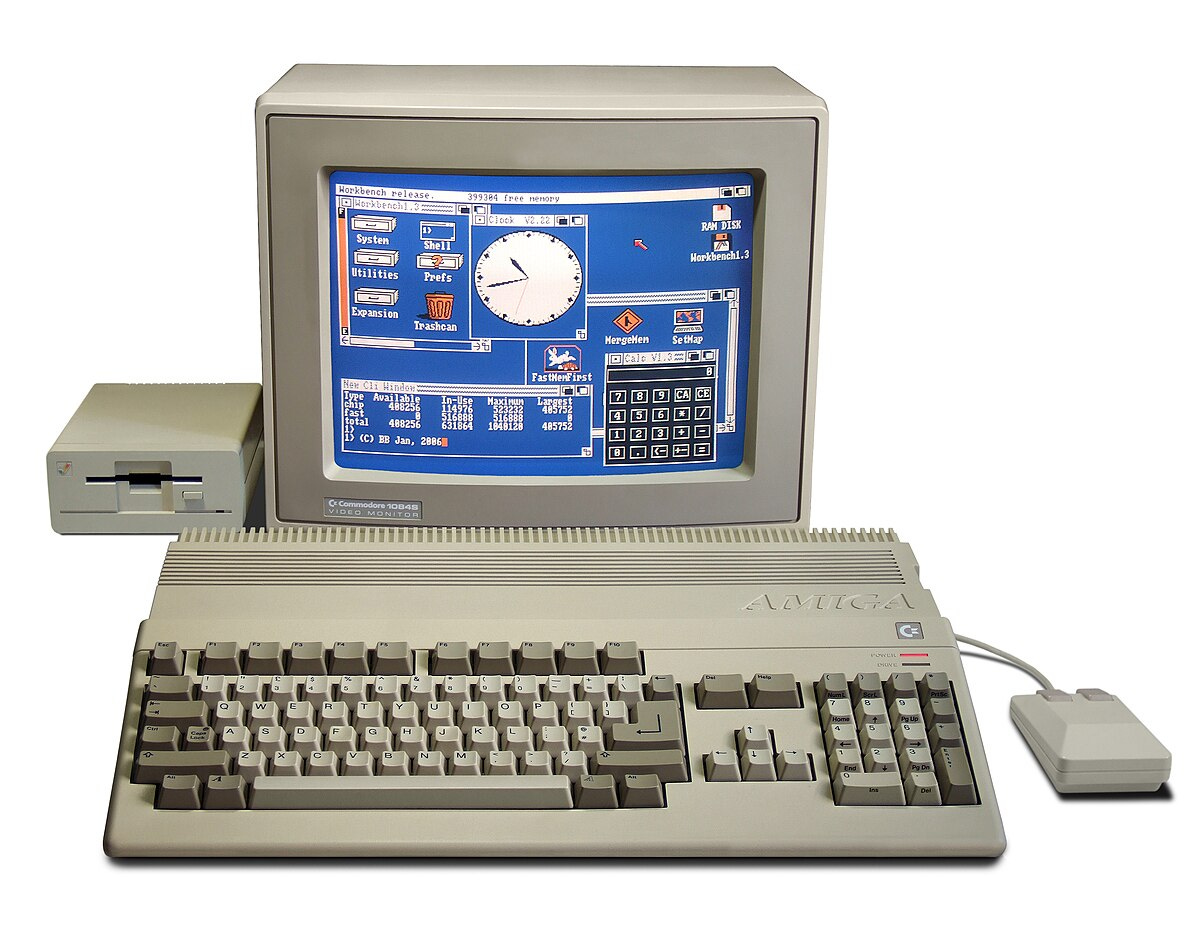

Desktop-Switching on the Commodore Amiga (1985)

The Commodore Amiga was an amazing computer when it jumped onto the market. We were impressed by the quality of the graphics, which produced near-realistic images (4096 colours!), and the sound was approaching the quality of music on a CD (fairly new at the time). We now had the power of full-sized arcade games in our living room, without inserting 50 cents for each game.

For me, the jaw-dropping experience was quite specific. The Amiga Workbench (desktops, icons, files, windows) allowed you to have multiple desktops open, each with different content and graphics resolution. Amazingly, you could grab the top menu bar of the desktop with your mouse pointer, quickly and smoothly dragging it downward to reveal the desktop behind it, or even a portion of the other desktop. These days, this “multiple desktop switching” feature is a standard part of the macOS or Windows experience.

When developing games on the ZX Spectrum, I was accustomed to the main CPU writing to display memory. At a rate of 50 times/second (TV refresh rate), you’d erase the previous graphics character (e.g. space invader, monster, game piece), then redraw it at the new location in the display memory, thereby providing the illusion of animation. To implement the smooth scrolling of this “desktop switching” feature on the Spectrum, we’d have needed to copy the entire display memory 50 times/second.
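As a rough illustration of that erase-and-redraw loop, here is a minimal Python sketch (not Z80 code, and not anything I actually wrote at the time). The "display memory" is modelled as a grid of characters using the Spectrum's 32 x 24 character cells; the function names and the `*` glyph are made up for this example.

```python
# Toy "display memory": one character per screen cell.
# 32 x 24 matches the Spectrum's character grid; everything else is illustrative.
WIDTH, HEIGHT = 32, 24


def make_display():
    """Return a blank display: HEIGHT rows of WIDTH space characters."""
    return [[" "] * WIDTH for _ in range(HEIGHT)]


def move_sprite(display, old_pos, new_pos, glyph="*"):
    """Erase the glyph at old_pos, then redraw it at new_pos."""
    old_x, old_y = old_pos
    new_x, new_y = new_pos
    display[old_y][old_x] = " "    # erase the previous frame's character
    display[new_y][new_x] = glyph  # redraw at the new location
    return new_pos


def animate(display, start, frames):
    """Move the glyph one cell right per frame, wrapping at the screen edge.

    On real hardware each iteration would be timed to the 50Hz TV refresh.
    """
    pos = start
    for _ in range(frames):
        x, y = pos
        pos = move_sprite(display, pos, ((x + 1) % WIDTH, y))
    return pos


display = make_display()
final_pos = animate(display, start=(0, 5), frames=10)
```

Only two cells change per frame here, which is why this was cheap enough for the CPU. Moving a whole screen, as the Amiga did, touches every cell on every frame.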

With the Amiga, this was the first time I’d experienced dedicated graphics hardware, and the smooth animation was done via instructions to the Graphics Processing Unit (GPU), rather than the main CPU copying bits of data around. This jaw-dropping experience was my introduction to ASICs (Application-Specific Integrated Circuits).

Seeing Europeans Posting on Usenet (1990)

When I first attended university (1989-1992), we had access to both SunOS and VMS-based computer systems. I had no idea how large they were physically, but with 128MB of RAM they seemed impressive. We always logged in remotely from dumb terminals, and knew these servers were somehow connected to the internet - mostly a university-based network at that time.

The jaw-dropping moment was my discovery of the Usenet news service, the primary mechanism for sharing news and discussions on the internet (there was no world-wide web at this time). Being in far-off New Zealand, where magazines arrived by sea, I was amazed to log in and instantly read postings from somebody in Europe, or somebody in the USA. Suddenly the world became a much smaller place, and for the low-low price of $5/MB, I too could communicate with the rest of the world.

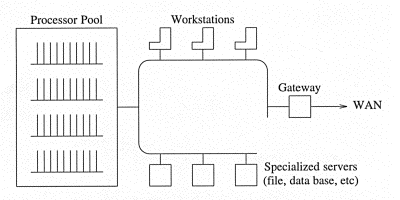

The Amoeba Operating System (1992)

While I was still an undergraduate student, I had the good fortune of beta testing the Amoeba Operating System. This research OS was built ground-up to be fully distributed, using 128-bit unique IDs to identify each resource in the system (e.g. files, devices, and processes). To access a resource, just provide the 128-bit ID and the system would figure out where on the network the resource was. The developer had no idea whether the resource was local to the current host, on some other host across the network, or whether it had recently moved from one place to another. This resource-location approach provided the appearance of a single large machine, rather than multiple smaller machines connected via a network.

In contrast, I’d already learned about the Unix system, and how it manages resources on a per-host basis. To access resources on a local machine, you’d make a local system call. However, if the resource is on a remote machine, you must explicitly send a TCP or UDP message to the remote host’s unique IP address, where a server process would access the resource for you. In this sense, accessing a local resource (a system call on the current host) versus a remote resource (via a network message) is very different.
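To make the contrast concrete, here is a small Python sketch of the location-transparent idea. It is only a toy model under my own assumptions: `Host`, `Cluster`, and their methods are hypothetical names, not Amoeba's actual API, and the linear search over hosts is a placeholder for whatever lookup the real system used.

```python
import secrets


class Host:
    """One machine on the network, holding some resources."""

    def __init__(self, name):
        self.name = name
        self.resources = {}  # resource ID (16 bytes) -> contents


class Cluster:
    """Hypothetical system-wide view: callers name a resource by ID only."""

    def __init__(self, hosts):
        self.hosts = hosts

    def create(self, host, contents):
        resource_id = secrets.token_bytes(16)  # 16 bytes = 128 bits
        host.resources[resource_id] = contents
        return resource_id

    def migrate(self, resource_id, source, target):
        """Move a resource between hosts; its ID does not change."""
        target.resources[resource_id] = source.resources.pop(resource_id)

    def read(self, resource_id):
        """Find the resource wherever it lives; the caller never names a host."""
        for host in self.hosts:  # placeholder lookup, not the real mechanism
            if resource_id in host.resources:
                return host.resources[resource_id]
        raise KeyError("unknown resource ID")


alpha, beta = Host("alpha"), Host("beta")
cluster = Cluster([alpha, beta])
file_id = cluster.create(alpha, "hello")
before = cluster.read(file_id)
cluster.migrate(file_id, alpha, beta)
after = cluster.read(file_id)  # same call, same ID, different host
```

The calling code is identical before and after the migration. In the per-host Unix model, the second read would have needed a network message to a different IP address.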

Amoeba was an elegant and full-featured operating system that impacted my thinking about system design, and I’m sad it never got the traction it deserved. Although the elegance of the OS design was eye-opening, it did suffer from performance issues, and wasn’t fully compatible with existing Unix software. In the end, it was eclipsed by the Linux system (as a side note, I had the good fortune of reading the famous Tanenbaum-Torvalds debate of Minix vs Linux as it was happening!).

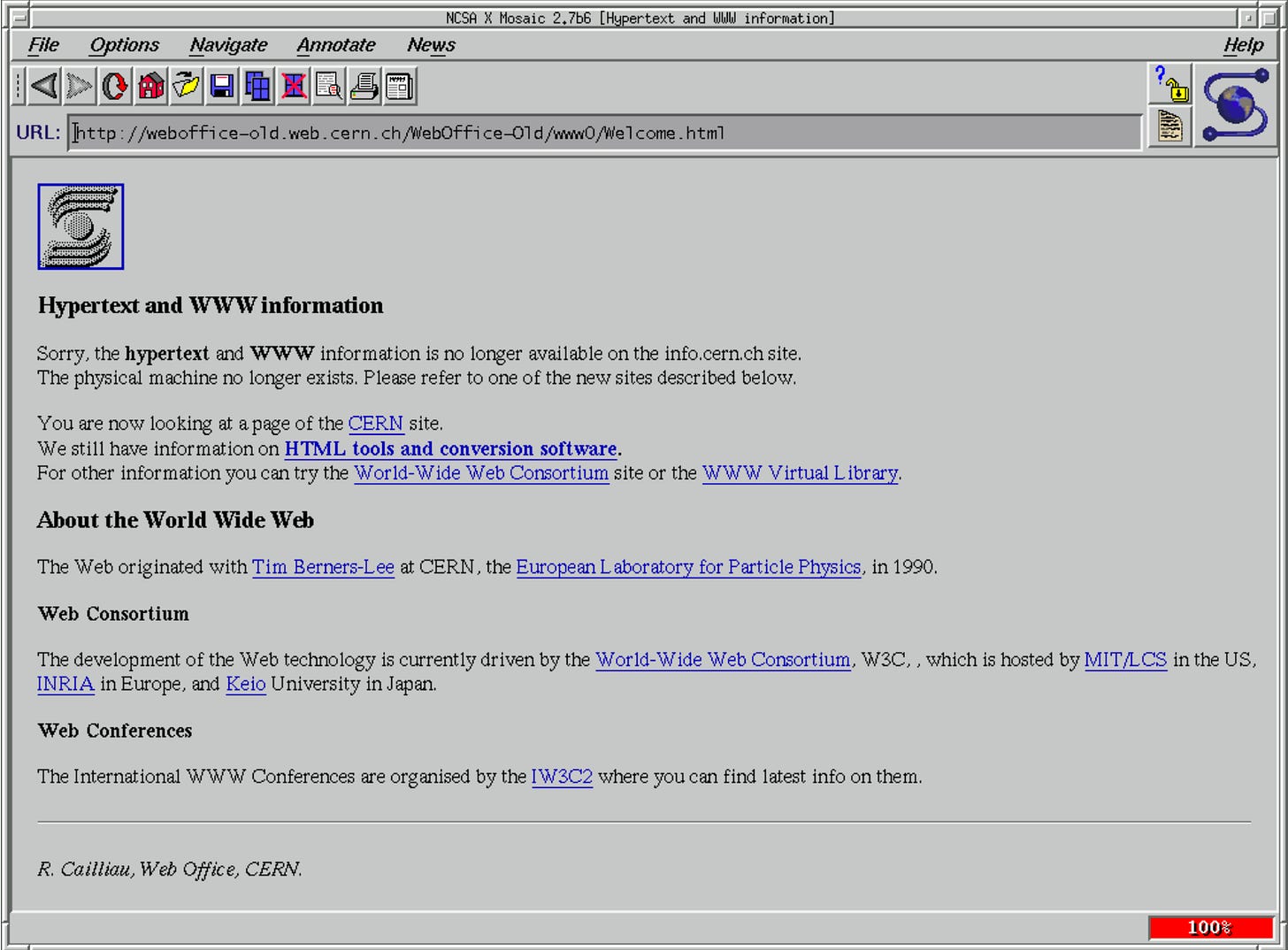

HTML, The WWW and Mosaic Browser (1993)

When I moved to Canada in 1993 to attend graduate school, I was fortunate to have an early glimpse of the WWW (World-Wide Web). My research lab installed the Mosaic Browser on their servers, allowing access to the fledgling web. I recall the NASA web site, some museum sites (perhaps the Smithsonian?), and the Internet Movie Database (IMDB). We also had the ability to create our own personal web sites, using a new description language known as HTML.

The jaw-dropping moment for me was seeing hyperlinking - the ability to click a link on a web page and instantly be transferred to another page, possibly on a completely different web site. Everything on the internet had a unique URL, and I could easily add my own content for others to link to.

It was exciting, but little did we realize the WWW would become synonymous with “the internet”, and that everybody in 2023 would spend so many hours each day surfing the web.

The Java Programming Language (1996) and Java Duke

I first heard of the Java programming language at the OOPSLA ’96 conference. People were raving about this newly-designed language that addressed many of C++’s weaknesses, and incorporated ideas from Smalltalk and LISP. For me, it was the first “new” language I’d seen, as languages like BASIC, C, C++, and Pascal were well-established by the time I learned them.

The jaw-dropping experience was the ability to execute Java code inside somebody else’s web browser (aka Java Applets) running on top of a virtual machine (VM). It was only a couple of years since I’d learned HTML, but now I could run general-purpose program code inside an end-user’s browser. I’d always loved the idea of “remote procedure calls”, but this was going to a new level.

One example was Java Duke, who had previously appeared as a fixed image on a web page. But now, it was possible to animate Duke, and have him actually wave his hand! My jaw dropped when I first saw that particular web page on the Sun Microsystems site! Gone were the days where HTML pages would load once, then remain fixed until the next page load.

Handheld GPS (1998)

The Global Positioning System (GPS) was first launched in 1978, but exclusively for the US military. By the late 1990s it had become accessible to the public, and I purchased a hand-held receiver unit. This was my first exposure to yet another technology that’s now in everyone’s pockets.

My GPS receiver was very simple, taking about five minutes to find your location. The small LCD display listed the satellites it was syncing with, and after a minimum number (I think it was 3?) it would report your longitude and latitude (no map drawing yet). We didn’t yet have Assisted GPS, so the start-up time was still fairly high.

Around that same time, I attended a university lecture given by the Chief Strategy Officer of Sun Microsystems. He presented a wild idea of having GPS in your car, which would direct you to the nearest McDonalds restaurant and provide you with discount coupons. What an awesome futuristic idea!

I recall riding the local buses and trains with my GPS device in hand, taking note of the coordinates of the local landmarks. Sadly that’s as far as I got with that idea, but it was certainly a revolution in the making.

YouTube’s Storage Size (2005)

One of my grad school colleagues did his research on video streaming, so I was no stranger to the topic. I also had plenty of short videos downloaded to my home computer, which were costly to store given their multi-megabyte file size.

What shocked me about YouTube was the magnitude of the storage required. If anybody could create and upload their own videos, possibly 15-30 minutes in duration, I was perplexed by the number of disks required to store all that data! Wouldn’t it be terabytes of new disk space required every hour?

I’m still not clear how they manage all that storage, but I’ve always imagined a convoy of trucks heading toward each data centre, carrying nothing but disk drives. You’d then require dozens of people working around the clock to plug them in, just to keep up with the amount of data being uploaded. It must be more of a problem these days with modern social media and photo applications, constantly uploading new images to the cloud.

A First Glance at Amazon EC2 (2006)

As far back as 1993, I had installed the Linux operating system on numerous hosts, mostly from floppy disk or CD-ROM. The first distribution I used was Slackware, requiring many hours of installation effort, and a lot of manual typing. Years later I purchased a license for Red Hat Linux, and received the CD-ROMs in the mail. Even as recently as 2017, I downloaded a Linux ISO file and ran CentOS in a virtual machine on my desktop computer.

When I started using Amazon EC2 in 2014, I had memories of an article I read in Linux Magazine, probably from 2006. The article talked about the new EC2 service from Amazon allowing you to create a Linux VM within minutes. You would ssh to your new VM to perform your work (no direct console access). Not only was installation time impressive, but you were only charged for the number of minutes the server was running, with no upfront hardware setup cost! When you were done, you’d simply delete the VM and no longer be charged.

I didn’t need EC2 at the time, because I already had my Linux hardware configured and “paid for”. It wasn’t until 2013 that I fully appreciated the value of the cloud - I was working for a company that insisted on their own private data centres, literally requiring months of careful project management and cross-team communication to get a new server running. The cloud changed all of that.

Interactivity of Google Maps

Google Maps has improved dramatically over the years, but the one improvement I remember the most is interactive scrolling. Before this feature, you needed to explicitly click the left, right, up, or down button to see the map scroll to a different location, triggering another page load each time. This was typical of web applications at the time.

One day though, I discovered you could simply drag the map left, right, up, or down, and the page would smoothly scroll, with new parts of the map appearing like magic. In fact, you could scroll infinitely in any direction. We’d now reached the time when JavaScript (running in the browser) could dynamically load data, and redraw parts of the canvas, without reloading the full web page.
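The idea of fetching only the newly exposed parts of the map can be sketched in a few lines. This is a guess at the general technique, not Google's implementation, and it is written in Python rather than browser JavaScript purely for illustration. The 256-pixel tile size and the function names are assumptions made for this example.

```python
TILE_SIZE = 256  # assumed tile edge in pixels


def visible_tiles(left, top, width, height, tile_size=TILE_SIZE):
    """Return the (col, row) of every tile overlapping the viewport.

    left/top are the viewport's pixel offset within the (unbounded) map.
    Floor division keeps this correct for negative offsets too.
    """
    first_col = left // tile_size
    last_col = (left + width - 1) // tile_size
    first_row = top // tile_size
    last_row = (top + height - 1) // tile_size
    return {
        (col, row)
        for col in range(first_col, last_col + 1)
        for row in range(first_row, last_row + 1)
    }


def drag(cache, left, top, dx, dy, width, height):
    """Shift the viewport by (dx, dy) and report which tiles must be fetched.

    `cache` is the set of tiles already loaded; it is updated in place,
    standing in for the background requests a browser would make.
    """
    left, top = left + dx, top + dy
    missing = visible_tiles(left, top, width, height) - cache
    cache |= missing
    return left, top, missing


cache = set()
left, top, first_load = drag(cache, 0, 0, 0, 0, 512, 512)     # initial view
left, top, after_drag = drag(cache, left, top, 100, 0, 512, 512)  # drag 100px
```

After the initial load, dragging the map 100 pixels only asks for the one new column of tiles on the right edge. Everything already on screen is reused, which is why the scrolling felt instant.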

I’m really not sure why this feature impressed me so much, but for some reason it did. It didn’t fit my mental model of how web browsers work, so this feature caused me to rethink what was possible.

The iPhone (2007)

It’s clear now that the iPhone has changed the world, but it was hardly the first device of its kind. I remember seeing the Apple Newton in 1993, and I personally owned a Palm Pilot in the early 2000s. Both of these devices used a stylus for data input, and had a crude form of handwriting recognition instead of a keyboard. Although some of the later Palm devices had wireless connectivity, they were generally standalone devices.

The iPhone was jaw-dropping for several reasons, but for me it was the use of finger-swiping gestures instead of the stylus (which I often feared losing). I was amazed the first time I saw somebody in a coffee shop making flicking and pinching motions on their phone screen, with the display updating via smooth animation to give immediate feedback on the operation.

IBM Watson (2011)

With all the news about ChatGPT, I would be remiss in not mentioning IBM Watson from 2011. I excitedly watched the episode of Jeopardy! where a computer faced off against two human contestants, and did extremely well. What boggled my mind was that Watson had so much knowledge about so many things, and was able to interpret English sentences and respond in mere seconds.

Of course, ChatGPT has taken this one step further, being accessible to the general public. But I’ll never forget the first time I saw IBM Watson in action.

This Person Does Not Exist (2020)

The most recent technology that blew my mind was This Person Does Not Exist. You can literally spend hours looking for new friends! Give it a try!